Recently I had a relatively slow performing source query within a for loop. The for loop ran approximately 12 times each time running this query. I solved the problem by calling the query once, caching the results, and performing look ups instead of executing the query again.

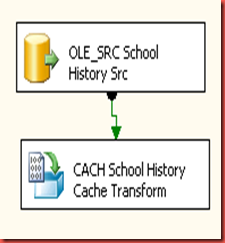

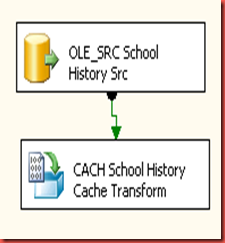

Here’s the control flow

Going into DFL Cache Data

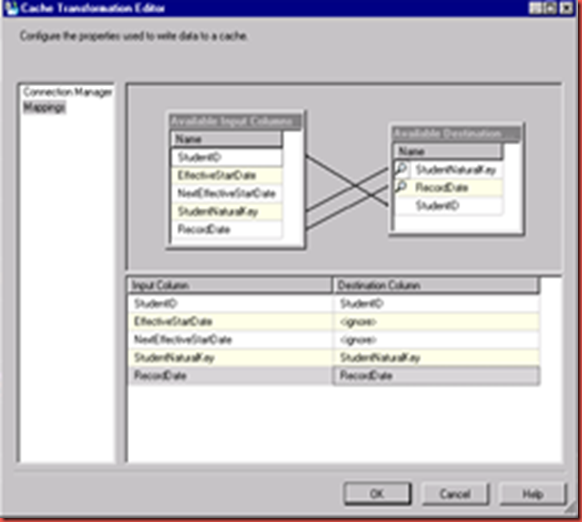

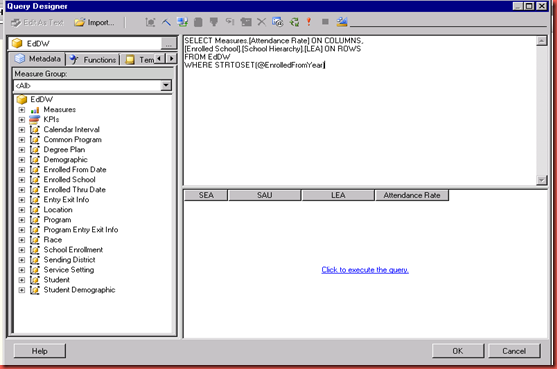

In order to perform a lookup that returns all of the relevant rows the query for OLE_SRC School History Src needs to have a unique identifier.

SELECT ROW_NUMBER() OVER (ORDER BY RAND()) ID, *

FROM ComplexQuery

Since I’m going to use year as the parameter in the for loop I’m placing the Cache Connection Manager index on ID and YearID.

Now that I’ve filled the cache I’m going to loop by year over the dataflow DFL Import DimSchool

Here’s DFL Import DimSchool

Next generate a list of numbers with the for loop variables. To do this create a variable called SQLCommand. Set EvaluateAsExpression to True with the expression as

"WITH Num1 (n) AS (SELECT 1 UNION ALL SELECT 1),

Num2 (n) AS (SELECT 1 FROM Num1 AS X, Num1 AS Y),

Num3 (n) AS (SELECT 1 FROM Num2 AS X, Num2 AS Y),

Num4 (n) AS (SELECT 1 FROM Num3 AS X, Num3 AS Y),

Num5 (n) AS (SELECT 1 FROM Num4 AS X, Num4 AS Y),

Num6 (n) AS (SELECT 1 FROM Num5 AS X, Num5 AS Y),

Nums (n) AS (SELECT ROW_NUMBER() OVER(ORDER BY n) FROM Num6)

SELECT n ID, " + (DT_WSTR, 4) @[User::_Year] + " YearID

FROM Nums

WHERE n <= 100000"

@{User::_Year] is the variable used in the for loop so the value of YearID changes with each iteration.

Choose Data access mode as SQL command from variable and select SQLCommand as the variable name. It results in the following query.

WITH Num1 (n) AS (SELECT 1 UNION ALL SELECT 1),

Num2 (n) AS (SELECT 1 FROM Num1 AS X, Num1 AS Y),

Num3 (n) AS (SELECT 1 FROM Num2 AS X, Num2 AS Y),

Num4 (n) AS (SELECT 1 FROM Num3 AS X, Num3 AS Y),

Num5 (n) AS (SELECT 1 FROM Num4 AS X, Num4 AS Y),

Num6 (n) AS (SELECT 1 FROM Num5 AS X, Num5 AS Y),

Nums (n) AS (SELECT ROW_NUMBER() OVER(ORDER BY n) FROM Num6)

SELECT n ID, 2000 YearID

FROM Nums

WHERE n <= 100000

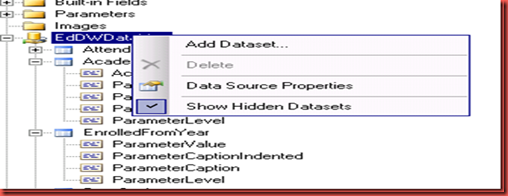

and the following output

ID YearID

The lookup is performed on the ID and YearID

I now have the same records I would’ve gotten by executing the query using the YearID as a parameter.