There are times when you have a hybrid environment and it’s not feasible to join Azure to your current active directory and you need a solution that allows Azure access to your on premise environment. While it’s straight forward to access a sql server instance residing on an Azure virtual machine it requires more effort for Azure to access your on premise sql server. One solution is a point to site VPN.

Configuring an on premise instance of sql server to be accessible from an azure vm requires the following

- Azure Point to Site VPN

- SQL Server Configured for Remote Access

- FileTable Configured for Remote Access

Microsoft gives in depth instructions for setting up a point to site VPN here:

https://msdn.microsoft.com/en-us/library/azure/dn133792.aspx

Once you’ve set up the VPN connect to the your virtual network. This blog will use MarinerNet.

We’ll need to get the internal ip address of the on premise machine. Open Network and Sharing and click on MarinerNet to bring up the Status dialog.

Click details in the status box and retreive the internal ip address assigned by Azure.

Configure On Premise Sql Server for Remote Access:

In order to access the machine from Azure you’ll need to allow remote connections for the sql server instance and open the port that sql server is listening on.

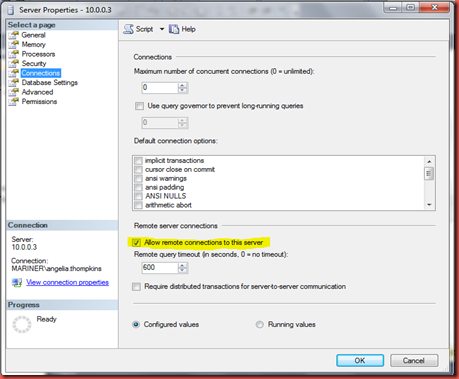

To allow remote connections open SSMS and open server properties. Click connection and check Allow remote connections.

Now that we’ve allowed remote connections we will need to allow the Azure VM through the firewall. This can be done by opening up port 1433.

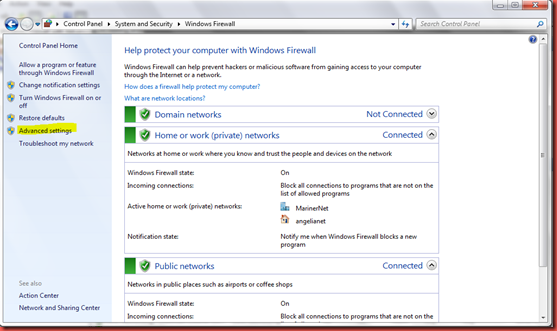

Go to Control Panel\System and Security\Windows Firewall and click Advanced Settings

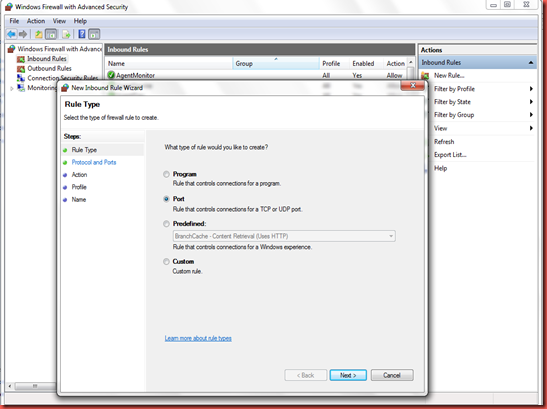

In Windows Firewall and advanced security click Inbound Rules and then under Actions New Rule. The dialog below will appear for rule type select port.

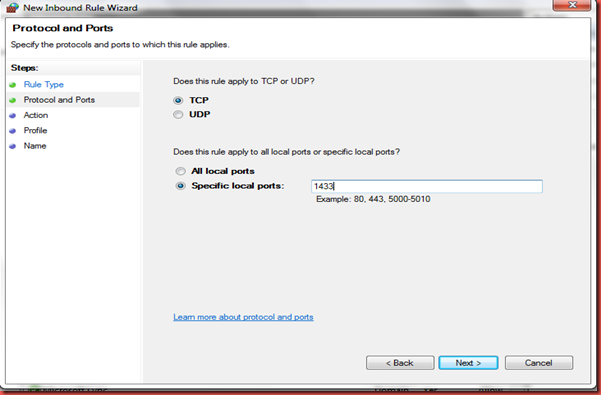

Then for protocol and ports enter a specific local port which is 1433.

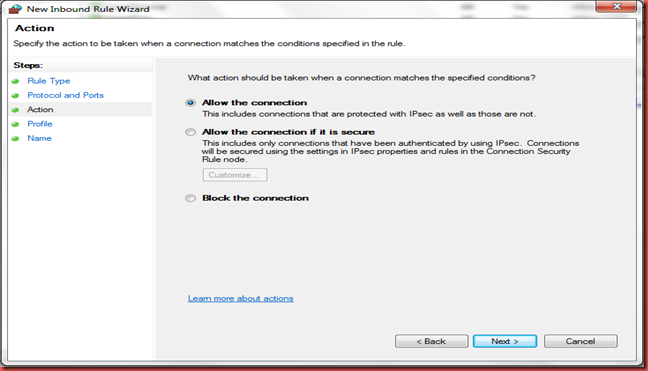

Next select allow the connection when a machine attempts to connect on port 1433.

The rule will always apply

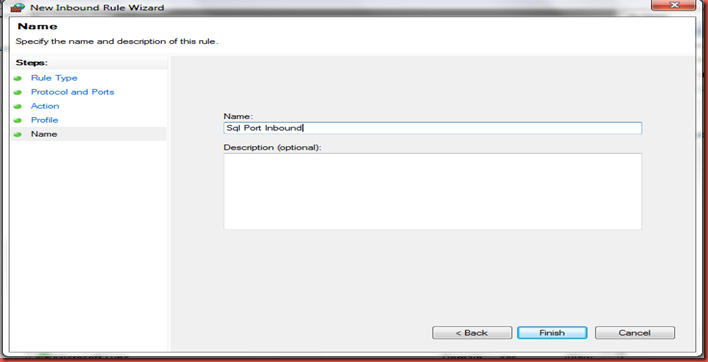

Now give it a name. I used Sql Port Inbound

Connectivity has now been set up between the Azure VM and the on Premises Sql Server.

Using the internal ip address obtained earlier and sql server authentication because the Azure VM and on Premises Sql Server are in two different domains we can connect to the On Premises Sql Server from the Azure VM.

Now that we’re able to connect to sql server from Azure let’s also add access to the file tables on the on premise sql server instance.

This requires allowing remote access to filestreams and creating a local user on the on premise sql server.

On the FileStream tab of Sql Server properties in Sql Server Configuration Manager check the Allow remote clients access box.

Create a local user and give them access to the FileTable in Sql Server.

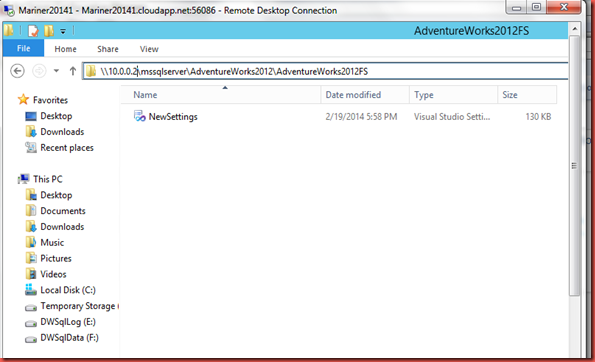

All you need to do is plug in the URL

\\10.0.0.2\mssqlserver\AdventureWorks2012\AdventureWorks2012FS

and enter the local user credentials when prompted.

The main drawback to this approach is that the Internal IP Address can change each time the vpn connection is reestablished. One way around this is a VPN for each machine for example create MarinerNet1 for Sql Server1 that only has 10.0.0.2 as a possible address and MarinerNet2 for Sql Server2 that only has 10.0.0.3 as a possible address.